Why Data Cannot be Understood Scientifically

If data is so important to us, then we need to be able to understand it.

If data is so important to us, then we need to be able to understand it. The modern prejudice is that things can only be understood scientifically. But is this really true for data?

Before we get into the topic, I feel I should at least provide some scientific credentials. I studied Zoology at Oxford University – the whole range, from molecular genetics to field ecology. I had the privilege of being personally tutored by H. B. D. (“Bernard”) Kettlewell, who showed selective predation on Peppered Moth polymorphs. I have handled specimens of a Dodo and a Tasmanian Wolf, and was taught to program by Richard Dawkins. During my vacations I worked in research stations on projects devoted to terrestrial ecology and freshwater biology. My Ph.D., from Bristol University, is in experimental field ecology trying to assess the level of competition within populations of insect species.

Now back to data.

The Popular View of Data

In the popular culture, to the extent that data is thought about at all, it seems to be as something that is “scientific”. Maybe it is indirectly so, because data is housed in technology, and technology is ultimately reducible to science. Thinking about it from another angle, in recent years many people have said that they are “data-driven”. Science is all supposed to be based on data, and so “data-driven” individuals can rise above reproach by appearing to align to science – supposedly the ultimate source of all truth. Or it was, as trust in “science” now seems to be declining.

What does it mean for something like data to be scientific? It would mean that the particular peculiarities of individual items of data, should be explainable by what we know about data as a class. If I find a tick on my skin while walking in the countryside, I know that it is likely to suck my blood because I know all ticks suck blood. I can predict the behavior of the individual from my understanding of the behavior of the class.

The common conception appears to be that the same applies to data. The properties and behaviors of data as a class should apply to all the individual instances of data. And the general public seems to expect that there are experts, whether scientists or technologists, who have this knowledge which in turn enables them to manage data successfully. Of course, no expert is ever going to dispel this illusion, and no ordinary person wants to be stuck with extra work to manage data. Leave that to the specialists.

The Problem of “Why”

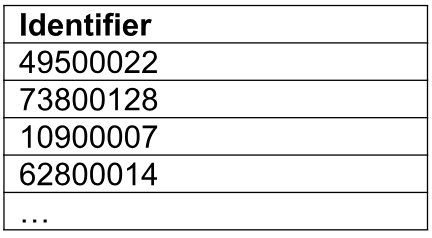

Unfortunately, it is not that simple. Let me give you an example. Many years ago I was asked to redesign a database table that housed information about financial instruments – stocks, bonds, and derivatives. Each record in the table has a identifier – a database column that that was unique for each record (so “identified” it), and was assigned automatically by the system managing the table. When I looked closely at this Identifier I saw it had a rather strange pattern. The Identifier was made up of 8 digits. The first three seemed to be random, and the following five digits seemed to be sequential numbers.

Because my task was to redesign this table I needed to understand if this strange pattern in the Identifier was important or not. I looked at all the documentation that had been created for the system and its database but could find nothing. At this point I knew I was going to be forced to replicate the pattern in the new database table I was designing. I could not prove that it was irrelevant, so I would have to keep it.

A Shocking Discovery

Then I had an idea. I started to ask all the “old timers” in the company if they knew anything about the pattern in the Financial Instrument Identifier. Eventually, I found someone who had been part of the team that had designed the table many years ago.

He told me that originally each Financial Instrument record was simply assigned a sequential number as its Identifier – 1, 2, 3, and so on for each record. However, the database software used the identifier to calculate the physical location of the data on the hard disk on which that data was stored. It did this in such a way that the value of the Identifier directly generated an address on disk, so records with Identifiers of 1, 2, 3, and so on were written onto disk physically adjacent to each other. As a result, all the data was crowded in just one place on the disk. The read/write head used to access the data would be positioned over this area of the disk all the time – and the heat is generated would burn out the disk.

To avoid this, the first 3 digits of the Identifier were changed to be random. Now the data was spread out all over the disk, so it never burned out.

I asked when this was done and was told it was 1992. I then asked why this problem was never fixed in the database software, and was told “Oh, but it was fixed within a year, but we had no reason to go back and undo the random assignment of the first 3 digits of Identifier”.

You May Never Know “Why”

This episode has haunted me until the present day. It means that how data is designed and managed is the result of decisions made for reasons that are now completely unknown, and are actually unknowable if they were never documented, or the documentation is lost, or nobody can be contacted who remembers what happened. Also, the reasons for a decision may make no sense in the modern world. Hard disk drives were dominant in 1992, but today solid state drives are much more common. They cannot get burnt out by a read/write head because they do not have read/write heads.

“Science” Does Not Apply

Which brings us back to the point that data cannot always be fully understandable based on a scientific approach. As in the Financial Instrument example, data may have been carried through different hardware upgrades and now be in an environment quite different to its original environment. But the design of that original environment may have significant impacts on data that persist to this day. Data simply cannot be understood just by inspecting the data in its current technical setting.

I am not saying that data can never be understood from using a scientific approach. There may be many cases where this is quite possible. But I think I have shown that it is not always possible.

Does It Matter?

It does, because existing data gets used for new purposes, and is often migrated through new generations of technology. The idiosyncrasies present in the original data may affect new ways in which it is used. Further, when data is migrated to a more modern technology, the idiosyncrasies are not removed, but are retained in the new environment because nobody knows why they exist, and everyone is too afraid to remove them.

The result is not sudden catastrophic failures – though that can happen - but suboptimal outcomes, a lot of additional effort required, and above all a slow sclerosis over time that impacts an organization’s ability to adapt and change. This runs counter to the notion that technology is always improving things, and we experience continual “progress”.

Can or will anything be done about this? It is very unlikely. Documentation of design decisions and technical environments seems to be at a low ebb today, and there seems to be a growing expectation that AI can do it for us, so why bother. The delusion that data can be fully understood scientifically is likely to persist.